Running a website, especially on a self-hosted platform like a Synology NAS with WordPress, offers great flexibility. However, it also means you’re responsible for its security and integrity. How do you know if an unauthorized change has occurred in your website’s files? That’s where a file integrity monitoring tool comes in handy!

Today, I implemented a simple yet effective Python script, deployed on a Synology NAS, that automatically monitors WordPress (or any other critical) files for changes.

The Background: Why Monitor File Changes?

Websites, particularly those powered by Content Management Systems (CMS) like WordPress, are dynamic. Files are constantly accessed and sometimes modified. While most changes are legitimate (e.g., updating a plugin, uploading a new image), malicious actors or accidental misconfigurations can also alter a website’s core files. Detecting these unauthorized changes quickly is crucial for:

- Security: Identifying malware injections, defacement, or unauthorized backdoors.

- Integrity: Ensuring the website’s content and functionality remain as intended.

- Troubleshooting: Pinpointing when and where a problem might have been introduced.

Our Goal: A Proactive Alert System

The primary goal of this script is to provide a proactive alert system. Instead of manually checking files, this script automates the process:

- Monitors Defined Directories: It scans specific directories where the website’s core files and database reside (e.g., the WordPress installation and MariaDB data folders).

- Calculates Hashes: For each file, it calculates a digital fingerprint (an MD5 hash) that changes whenever the file’s content changes.

- Compares with Baseline: On subsequent runs, it compares the current hashes with a previously recorded “baseline.”

- Detects Changes: If a file’s hash has changed, or if new files appear, or if old files disappear, it flags them.

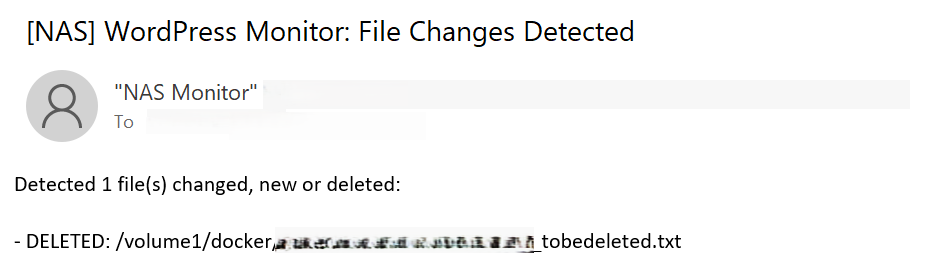

- Notification: Crucially, if any changes are detected, it sends an email notification detailing what changed: the file path and, for new or modified files, the file size and last-modified timestamp.
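To make the fingerprint idea concrete, here is a minimal, standalone sketch. It is not part of the monitoring script. It hashes a file in 4 KB chunks, which is the same reading pattern the script’s calculate_file_hash() uses, and shows that changing a single character yields a completely different MD5 digest. The helper name md5_of_file and the sample PHP content are illustrative assumptions.

```python
import hashlib
import os
import tempfile


def md5_of_file(path, chunk_size=4096):
    """Hash a file in fixed-size chunks so large files never need to fit in memory."""
    hasher = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


# Create a throwaway file standing in for a WordPress file (illustrative content)
fd, path = tempfile.mkstemp(suffix=".php")
with os.fdopen(fd, "wb") as f:
    f.write(b"<?php echo 'hello'; ?>")
before = md5_of_file(path)

# Change a single character, as an injected edit might
with open(path, "wb") as f:
    f.write(b"<?php echo 'hellO'; ?>")
after = md5_of_file(path)

os.remove(path)  # Clean up the temporary file
print(before)
print(after)
print("changed" if before != after else "unchanged")  # prints "changed"
```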

The Benefits: Why This Approach?

- Simplicity & Transparency: The script is written in Python, making it relatively easy to understand and modify if I need to customize its behavior or integrate it with other systems.

- Early Detection: Catching unauthorized changes early can prevent major security breaches or data loss.

- Automation: Once set up in Synology’s Task Scheduler, it runs automatically in the background, freeing me from manual checks.

- Detailed Insights: The email notifications provide specific information about the changed files, helping me quickly identify and investigate the issue.

- Resource-Friendly: MD5 is fast to compute (although each run still reads every monitored file in full), and the script only sends notifications when actual changes are detected, keeping resource usage and email noise low.

- Synology Native: Leverages Synology DSM’s built-in Python environment and Task Scheduler for seamless integration.
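Because the script is plain Python, the hash algorithm is easy to swap. The following is a hypothetical variant, not the published script. It passes an algorithm name to hashlib.new(), so the same chunked loop can produce MD5 (as the script uses) or SHA-256, which is slower but, unlike MD5, still considered collision-resistant. HASH_ALGORITHM and file_digest are illustrative names, and the 64 KB chunk size is an arbitrary choice.

```python
import hashlib
import os
import tempfile

# Hypothetical setting: any name accepted by hashlib.new, e.g. "md5" or "sha256"
HASH_ALGORITHM = "sha256"


def file_digest(path, algorithm=HASH_ALGORITHM, chunk_size=65536):
    """Return the hex digest of a file using the named hashlib algorithm."""
    hasher = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


# Illustrative sample file
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"wp-config contents")
    sample = tmp.name

md5_hex = file_digest(sample, "md5")
sha256_hex = file_digest(sample, "sha256")
os.remove(sample)  # Clean up the temporary file

print(len(md5_hex))     # 32 hex characters (128 bits)
print(len(sha256_hex))  # 64 hex characters (256 bits)
```

If you switch algorithms on an existing installation, delete last_hash.json first. Otherwise every stored hash will differ from the newly computed one, and the next run will report every file as modified.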

How It Works:

At its core, the script does the following:

- Configuration: It defines which folders to monitor, where to store its working files (the hash snapshots and the log), and the email notification settings.

- Snapshot: It walks through all files in the specified directories (like the WordPress and database folders), calculates a “fingerprint” (MD5 hash) for each file, and stores this as the “current state.” It skips symbolic links, Git directories, .log files, cache directories and MariaDB ib_logfile transaction logs to avoid false alarms.

- Comparison: It then loads the “last known good” state (my baseline) from a saved file.

- Identify Differences: By comparing the “current state” with the “last state,” it can tell if a file has been:

  - Modified: The content has changed (hash is different).

  - New: The file didn’t exist before.

  - Deleted: The file existed before but is now gone.

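- Alert: If any differences are found, it compiles a clear list of changes and sends it to the configured email address. It also logs these events for historical tracking.

- Update Baseline: Finally, the “current state” is saved as the “last state” for the next check. This happens whether or not changes were found, so each change is reported once and then becomes part of the new baseline.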
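The comparison step reduces to set operations on two path-to-hash dictionaries once the file walking, metadata and email code are removed. The sketch below is illustrative. diff_hashes and the sample paths are hypothetical, and the hash strings are placeholders. The script itself performs the same comparison inline while also collecting file size and modification time.

```python
def diff_hashes(old, new):
    """Classify paths as new, modified or deleted between two {path: hash} snapshots."""
    old_paths, new_paths = set(old), set(new)
    added = sorted(new_paths - old_paths)    # present now, absent from the baseline
    deleted = sorted(old_paths - new_paths)  # in the baseline, gone now
    modified = sorted(p for p in old_paths & new_paths if old[p] != new[p])
    return added, modified, deleted


# Placeholder hash values; real snapshots hold 32-character MD5 hex digests
baseline = {
    "/wp/index.php": "aaa",
    "/wp/wp-config.php": "bbb",
    "/wp/old-plugin.php": "ccc",
}
current = {
    "/wp/index.php": "aaa",      # unchanged
    "/wp/wp-config.php": "zzz",  # content changed
    "/wp/shell.php": "ddd",      # appeared since the last run
}

added, modified, deleted = diff_hashes(baseline, current)
print("NEW:", added)          # NEW: ['/wp/shell.php']
print("MODIFIED:", modified)  # MODIFIED: ['/wp/wp-config.php']
print("DELETED:", deleted)    # DELETED: ['/wp/old-plugin.php']
```

Sorting the results is a choice made in this sketch. It keeps the report order stable between runs, which makes successive alerts easier to compare.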

Core Code

import os

import hashlib

import json

import datetime

import smtplib

from email.mime.text import MIMEText

from email.header import Header

# --- Configuration ---

TARGET_DIRS = [

dirs1 #"/volume1/docker/app1",

dirs2 #"/volume1/docker/app2"

]

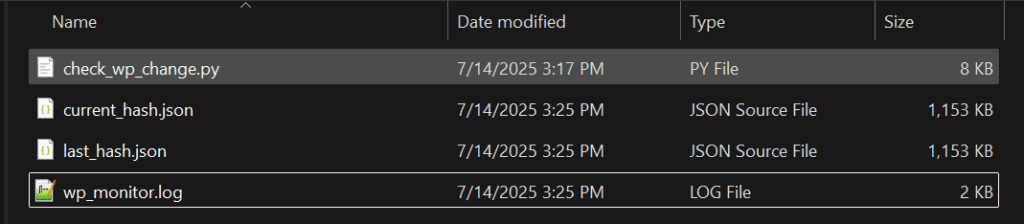

SCRIPT_DIR = dir3 #"/volume1/tool/wp_monitor_dir"

HASH_FILE = os.path.join(SCRIPT_DIR, "last_hash.json") # Using JSON for better structure

TMP_HASH_FILE = os.path.join(SCRIPT_DIR, "current_hash.json") # Using JSON for better structure

LOG_FILE = os.path.join(SCRIPT_DIR, "wp_monitor.log")

# Email settings

EMAIL_TO = "*@example.com"

MAIL_SUBJECT = "[NAS] WordPress Monitor: File Changes Detected"

SENDER_NAME = "NAS Monitor"

EMAIL_FROM = "your_nas_email@example.com" # !!! IMPORTANT: Replace with your actual sender email address !!!

# !!! IMPORTANT: SMTP server details for sending emails !!!

# For Gmail, you'd use:

SMTP_SERVER = "smtp.gmail.com"

SMTP_PORT = 587

SMTP_USERNAME = "your_nas_email@example.com" # !!! IMPORTANT: Your Gmail/SMTP username !!!

SMTP_PASSWORD = "your_app_password" # !!! IMPORTANT: Your Gmail App Password or SMTP password !!!

# Exclusions

EXCLUSIONS = [

".git", # .git directories

".log", # .log files (anywhere)

"cache", # directories named 'cache'

"ib_logfile", # MariaDB transaction log files (e.g., ib_logfile0, ib_logfile1)

]

# --- Helper Functions ---

def write_log(message):

"""Appends a timestamped message to the log file."""

timestamp = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")

with open(LOG_FILE, "a", encoding="utf-8") as f:

f.write(f"{timestamp} - {message}\n")

def calculate_file_hash(filepath):

"""Calculates MD5 hash of a file."""

hasher = hashlib.md5()

try:

with open(filepath, 'rb') as f:

for chunk in iter(lambda: f.read(4096), b""):

hasher.update(chunk)

return hasher.hexdigest()

except Exception as e:

write_log(f"WARNING: Could not read file {filepath} for hashing: {e}")

return None

def is_excluded(filepath):

"""Checks if a file path should be excluded based on defined patterns."""

for exclusion in EXCLUSIONS:

if exclusion.startswith("."): # For hidden dirs/files like .git

if f"/{exclusion}/" in filepath or filepath.endswith(exclusion):

return True

elif exclusion.endswith("logfile"): # For ib_logfile pattern

if exclusion in os.path.basename(filepath):

return True

elif exclusion == "cache": # For 'cache' directory

if f"/{exclusion}/" in filepath:

return True

elif exclusion.startswith("*."): # For file extensions like *.log

if filepath.endswith(exclusion[1:]):

return True

return False

def send_email(subject, body):

"""Sends an email notification."""

try:

msg = MIMEText(body, 'plain', 'utf-8')

msg['From'] = Header(f'"{SENDER_NAME}" <{EMAIL_FROM}>', 'utf-8')

msg['To'] = Header(EMAIL_TO, 'utf-8')

msg['Subject'] = Header(subject, 'utf-8')

with smtplib.SMTP(SMTP_SERVER, SMTP_PORT) as server:

server.starttls() # Enable TLS encryption

server.login(SMTP_USERNAME, SMTP_PASSWORD)

server.sendmail(EMAIL_FROM, EMAIL_TO, msg.as_string())

write_log("Notification email sent successfully.")

except Exception as e:

write_log(f"ERROR: Failed to send email: {e}")

write_log(f"Subject: {subject}")

write_log(f"Body: {body}") # Log the body for debugging if send fails

# --- Main Logic ---

def main():

os.makedirs(SCRIPT_DIR, exist_ok=True) # Ensure script directory exists

write_log("Generating current file hashes...")

current_hashes = {}

for target_dir in TARGET_DIRS:

if not os.path.isdir(target_dir):

write_log(f"WARNING: Target directory not found: {target_dir}")

continue

for root, dirs, files in os.walk(target_dir):

# Modify dirs in-place to skip excluded subdirectories during os.walk

# This is more efficient than filtering individual files from excluded dirs

dirs[:] = [d for d in dirs if not is_excluded(os.path.join(root, d))]

for filename in files:

filepath = os.path.join(root, filename)

if not os.path.islink(filepath) and not is_excluded(filepath): # Skip symlinks and excluded files

file_hash = calculate_file_hash(filepath)

if file_hash:

current_hashes[filepath] = file_hash

# Save current hashes

with open(TMP_HASH_FILE, "w", encoding="utf-8") as f:

json.dump(current_hashes, f, indent=4)

# First run: initialize hash baseline

if not os.path.exists(HASH_FILE):

with open(HASH_FILE, "w", encoding="utf-8") as f:

json.dump(current_hashes, f, indent=4)

write_log("First run: baseline hash file created.")

return # Exit after first run, no changes to report yet

write_log("Loading previous hashes...")

old_hashes = {}

try:

with open(HASH_FILE, "r", encoding="utf-8") as f:

old_hashes = json.load(f)

except (FileNotFoundError, json.JSONDecodeError) as e:

write_log(f"WARNING: Could not load previous hash file ({HASH_FILE}): {e}. Assuming no previous baseline.")

changed_files_metadata = []

write_log("Comparing current and previous hashes...")

# Check for modified and new files

for filepath, current_hash in current_hashes.items():

if filepath not in old_hashes:

# New file

try:

mod_time = datetime.datetime.fromtimestamp(os.path.getmtime(filepath)).strftime("%Y-%m-%d %H:%M:%S")

file_size = os.path.getsize(filepath)

changed_files_metadata.append(f"- NEW: {filepath} (Created/Modified: {mod_time}, Size: {file_size} bytes)")

except FileNotFoundError:

changed_files_metadata.append(f"- NEW: {filepath} (Metadata unknown - file may have been deleted immediately)")

elif old_hashes[filepath] != current_hash:

# Modified file

try:

mod_time = datetime.datetime.fromtimestamp(os.path.getmtime(filepath)).strftime("%Y-%m-%d %H:%M:%S")

file_size = os.path.getsize(filepath)

changed_files_metadata.append(f"- MODIFIED: {filepath} (Modified: {mod_time}, Size: {file_size} bytes)")

except FileNotFoundError:

changed_files_metadata.append(f"- MODIFIED: {filepath} (Metadata unknown - file may have been deleted immediately)")

# Check for deleted files

for filepath in old_hashes:

if filepath not in current_hashes:

# Deleted file

changed_files_metadata.append(f"- DELETED: {filepath}")

if changed_files_metadata:

write_log(f"{len(changed_files_metadata)} file(s) changed, new or deleted:")

for item in changed_files_metadata:

write_log(item)

email_body = f"Detected {len(changed_files_metadata)} file(s) changed, new or deleted:\n\n"

email_body += "\n".join(changed_files_metadata)

send_email(MAIL_SUBJECT, email_body)

else:

write_log("No file changes detected.")

# Update hash file for next run

write_log("Hash file updated for next run.")

with open(HASH_FILE, "w", encoding="utf-8") as f:

json.dump(current_hashes, f, indent=4)

if __name__ == "__main__":

main()Script file, log file and files storing hash info: